The compute capability determines which operations a device supports. Information about the compute capability of the current system can be retrieved using CUDAInformation.

CUDA memory is divided into different levels, each with its own advantages and limitations. The following figure depicts all types of memory available to CUDA.

Global Memory: The most abundant (but slowest) memory available on the GPU. This is the memory advertised on the packaging: 128, 256, or 512 MB. All threads can access elements in global memory, although for performance reasons these accesses tend to be kept to a minimum and have further restrictions on them. The general rule is that performance deteriorates if global memory is accessed more than once. The performance restrictions on global memory have been relaxed on recent hardware and will likely be relaxed even further.

Texture Memory: Texture memory resides in the same location as global memory, but it is read-only. Texture memory does not suffer from the performance deterioration found in global memory. On the flip side, only char, int, and float are supported types.

Constant Memory: Fast constant memory that is accessible from any thread in the grid. The memory is cached, but limited to 64 KB globally.

Shared Memory: Fast memory that is local to a specific block. On current hardware, the amount of shared memory is limited to 16 KB per block.

Local Memory: Local memory is local to each thread, but resides in global memory unless the compiler places the variables in registers. Although local memory use should generally be kept to a minimum because its accesses are slow, it does not have the same problems as global memory.
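To make the size limits and the "access global memory only once" rule concrete, here is a minimal CPU-only sketch in Python. It is plain arithmetic, not CUDA code. The 16 KB and 64 KB figures come from the text; the 3-point stencil workload, the block size of 256, and all function names are assumptions chosen for illustration.

```python
# CPU-only arithmetic; no GPU or CUDA toolkit is involved.
SHARED_BYTES_PER_BLOCK = 16 * 1024  # shared memory limit quoted in the text
CONSTANT_BYTES_TOTAL = 64 * 1024    # constant memory limit quoted in the text
FLOAT_BYTES = 4                     # size of one single-precision float


def max_elements(capacity_bytes, element_bytes=FLOAT_BYTES):
    """Number of elements of the given size that fit in a memory region."""
    return capacity_bytes // element_bytes


def global_reads_naive(n, radius):
    """Global reads when each of n threads fetches its full stencil window.

    Simplification: boundary threads are counted as reading a full window.
    """
    return n * (2 * radius + 1)


def global_reads_staged(n, block_size, radius):
    """Global reads when each block copies its tile plus halo into shared
    memory once and then works only on that copy."""
    blocks = (n + block_size - 1) // block_size  # ceiling division
    return blocks * (block_size + 2 * radius)


def tile_fits_in_shared(block_size, radius, element_bytes=FLOAT_BYTES):
    """Check the staged tile against the 16 KB per-block limit."""
    return (block_size + 2 * radius) * element_bytes <= SHARED_BYTES_PER_BLOCK


if __name__ == "__main__":
    print(max_elements(SHARED_BYTES_PER_BLOCK))  # floats that fit per block
    print(max_elements(CONSTANT_BYTES_TOTAL))    # floats in constant memory
    print(global_reads_naive(1024, 1))
    print(global_reads_staged(1024, 256, 1))
    print(tile_fits_in_shared(256, 1))
```

Under these assumptions, 1024 elements with a radius of 1 cost 3072 global reads in the naive scheme and 1032 when each block of 256 threads stages its tile in shared memory first. The counts are a simplified model and say nothing about actual timings on a given device.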


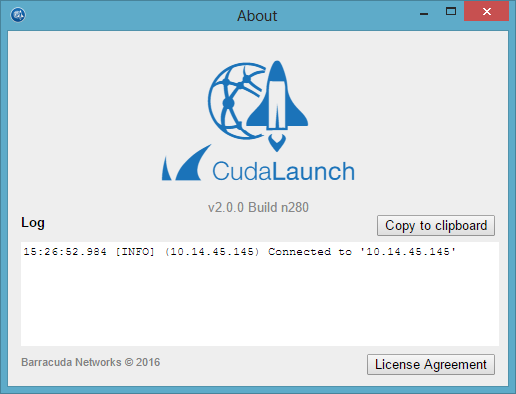

The Compute Unified Device Architecture (CUDA) was developed by NVIDIA in 2007 as a way to make the GPU more general. Although GPU programming has been around for many years, its difficulty kept adoption limited. CUDALink aims to make GPU programming easy and to accelerate that adoption. With the Wolfram Language, you only need to write CUDA kernels. This is done by utilizing all levels of the NVIDIA architecture stack.

CUDA's programming is based on the data parallel model. From a high-level standpoint, the problem is first partitioned onto hundreds or thousands of threads for computation. Suppose your computation applies a function to every element of InputData and is written as a CUDALaunch over a 10000 × 10000 domain. Such a launch starts 10000 × 10000 threads, passes each thread its indices, applies the function to InputData, and places the results in OutputData. CUDALink's equivalent of CUDALaunch is CUDAFunction.

The reason CUDA can launch thousands of threads lies in its hardware architecture. The following sections will discuss this, along with how threads are partitioned for execution.

Unlike the message-passing or thread-based parallel programming models, CUDA programming maps problems onto a one-, two-, or three-dimensional grid. The following shows a typical two-dimensional CUDA thread configuration. Each grid contains multiple blocks, and each block contains multiple threads. In terms of the above image, a grid, block, and thread are as follows.

Choosing whether to have a one-, two-, or three-dimensional thread configuration depends on the problem. In the case of image processing, for example, you map the image onto the threads as shown in the following figure and apply a function to each pixel. A one-dimensional cellular automaton can map onto a one-dimensional grid.

The gist of CUDA programming is to copy data from the host to the device, launch many threads (typically in the thousands), wait until the GPU execution finishes (or perform CPU calculation while waiting), and finally copy the result from the device to the host. The above figure details the typical cycle of a CUDA program.

1. Allocate memory on the GPU. GPU and CPU memory are physically separate, and the programmer must manage the allocations and copies.
2. Copy the memory from the CPU to the GPU.
3. Configure the thread configuration: choose the correct block and grid dimension for the problem.
4. Launch the configured threads.
5. Synchronize the CUDA threads to ensure that the device has completed all its tasks before doing further operations on the GPU memory.
6. Once the threads have completed, memory is copied back from the GPU to the CPU.

When using the Wolfram Language, you need not worry about many of the steps.

In the end, many applications written using CUDALink are demonstrated.
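The thread configuration and the copy, launch, and copy-back cycle described in this section can be imitated on the CPU. The following Python sketch is only an emulation: it runs the "threads" one after another, uses ordinary lists in place of device memory, and does not call CUDA or CUDALink. The helper names (`grid_for`, `launch_2d`, `invert_kernel`, `run_cycle`), the 16 × 16 block size, and the pixel-inversion task are all assumptions made for illustration.

```python
# CPU-only emulation of the CUDA launch cycle; no GPU is involved.
# All names here (grid_for, launch_2d, invert_kernel, run_cycle) are hypothetical.


def grid_for(width, height, block_dim):
    """Smallest grid of blocks that covers a width x height problem."""
    bx, by = block_dim
    return ((width + bx - 1) // bx, (height + by - 1) // by)


def launch_2d(kernel, grid_dim, block_dim, *args):
    """Call the kernel once per (block, thread) pair.

    A GPU would run these calls in parallel; here they run one by one.
    """
    for block_y in range(grid_dim[1]):
        for block_x in range(grid_dim[0]):
            for thread_y in range(block_dim[1]):
                for thread_x in range(block_dim[0]):
                    kernel((block_x, block_y), (thread_x, thread_y),
                           block_dim, *args)


def invert_kernel(block_idx, thread_idx, block_dim, src, dst, width, height):
    """Per-thread work: invert the one pixel this thread is mapped to."""
    x = block_idx[0] * block_dim[0] + thread_idx[0]
    y = block_idx[1] * block_dim[1] + thread_idx[1]
    # The grid may overshoot the image, so threads outside it do nothing.
    if x < width and y < height:
        dst[y * width + x] = 255 - src[y * width + x]


def run_cycle(host_image, width, height, block_dim=(16, 16)):
    """Walk through the allocate, copy, configure, launch, copy-back cycle."""
    device_src = list(host_image)        # allocate and copy host -> "device"
    device_dst = [0] * (width * height)  # allocate the output buffer
    grid_dim = grid_for(width, height, block_dim)  # configure blocks and grid
    launch_2d(invert_kernel, grid_dim, block_dim,
              device_src, device_dst, width, height)  # launch
    # A real launch is asynchronous and needs a synchronize step here;
    # this sequential emulation has already finished.
    return list(device_dst)              # copy "device" -> host


if __name__ == "__main__":
    image = [(7 * i) % 256 for i in range(20 * 10)]
    result = run_cycle(image, 20, 10)
    print(result == [255 - p for p in image])
```

Each emulated thread derives its pixel coordinates from its block index, the block dimensions, and its thread index, which is the same mapping a two-dimensional launch relies on. The bounds check matters because the grid is rounded up to whole blocks: a 20 × 10 image with 16 × 16 blocks needs a 2 × 1 grid, so some threads fall outside the image.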


CUDA programming in the Wolfram Language: CUDAFunctionLoad loads a CUDA function into the Wolfram Language. This document describes the GPU architecture and how to write a CUDA kernel.

I have edited the saxpy. Unfortunately, compilation fails and I don't know how to debug.


I am compiling some code that runs saxpy on a GPU. The code is from a university assignment that I am trying out.
